Many e-commerce brands were losing sales because of costly photoshoots, slow editing cycles, and inconsistent imagery.

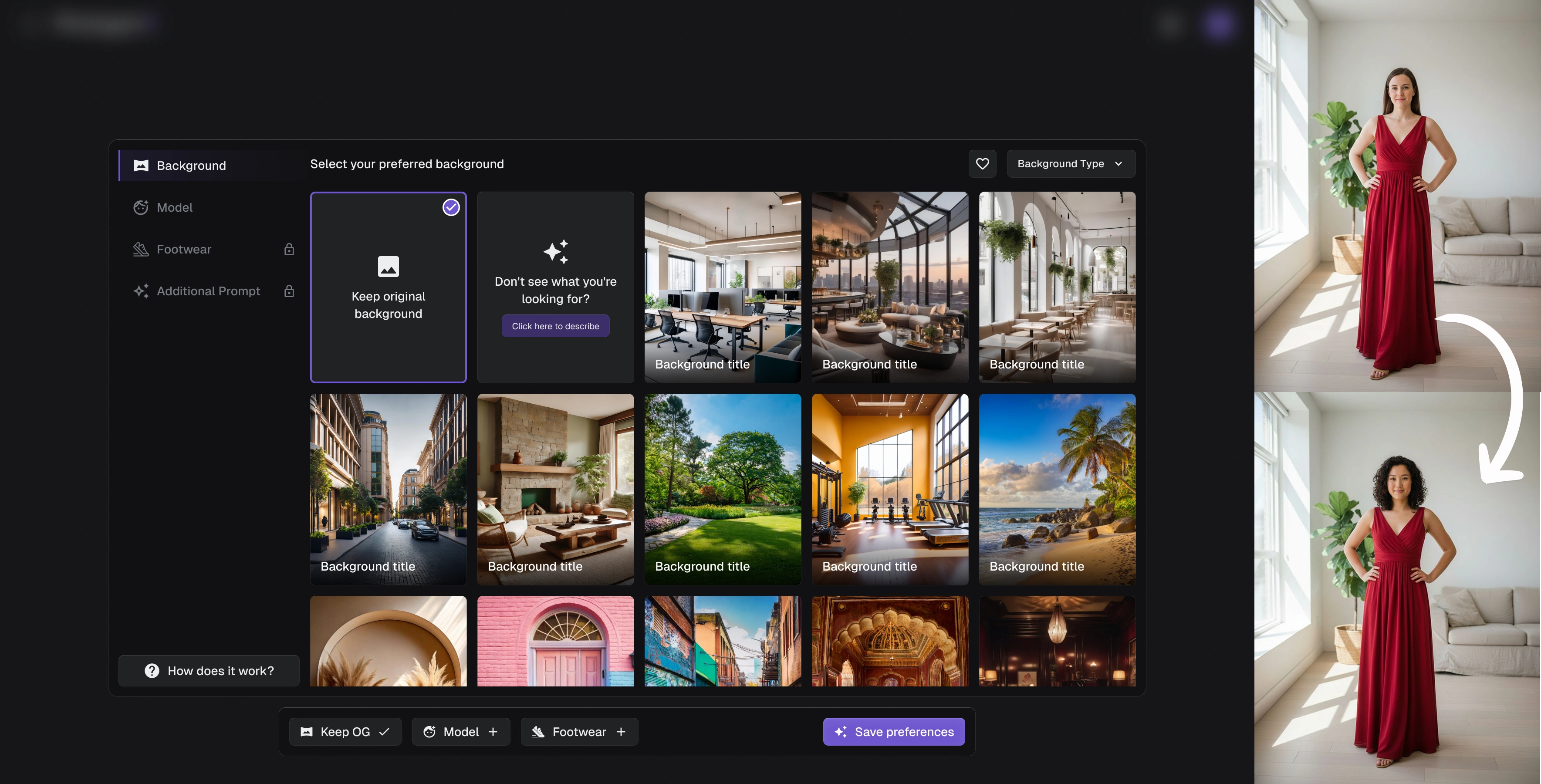

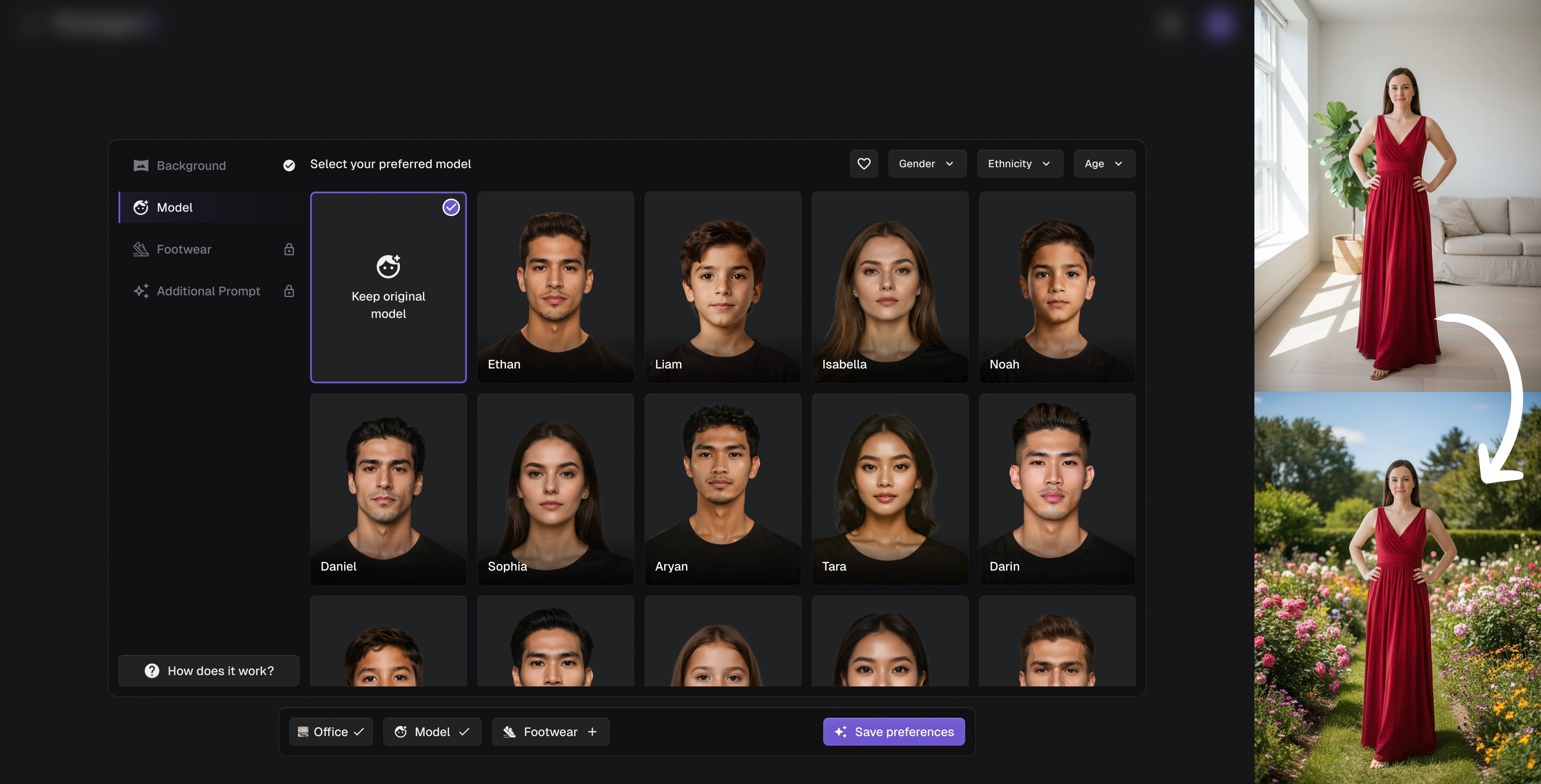

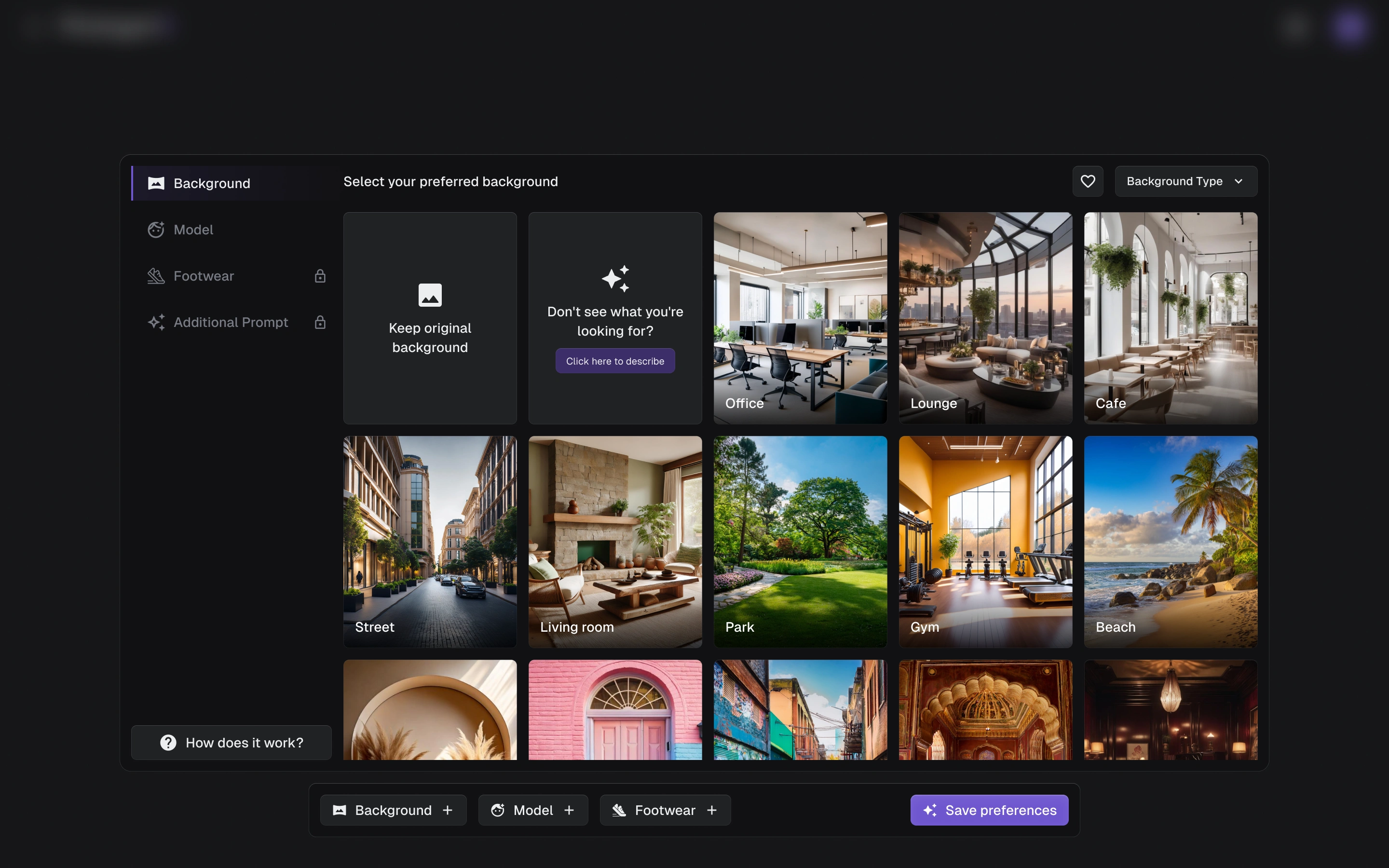

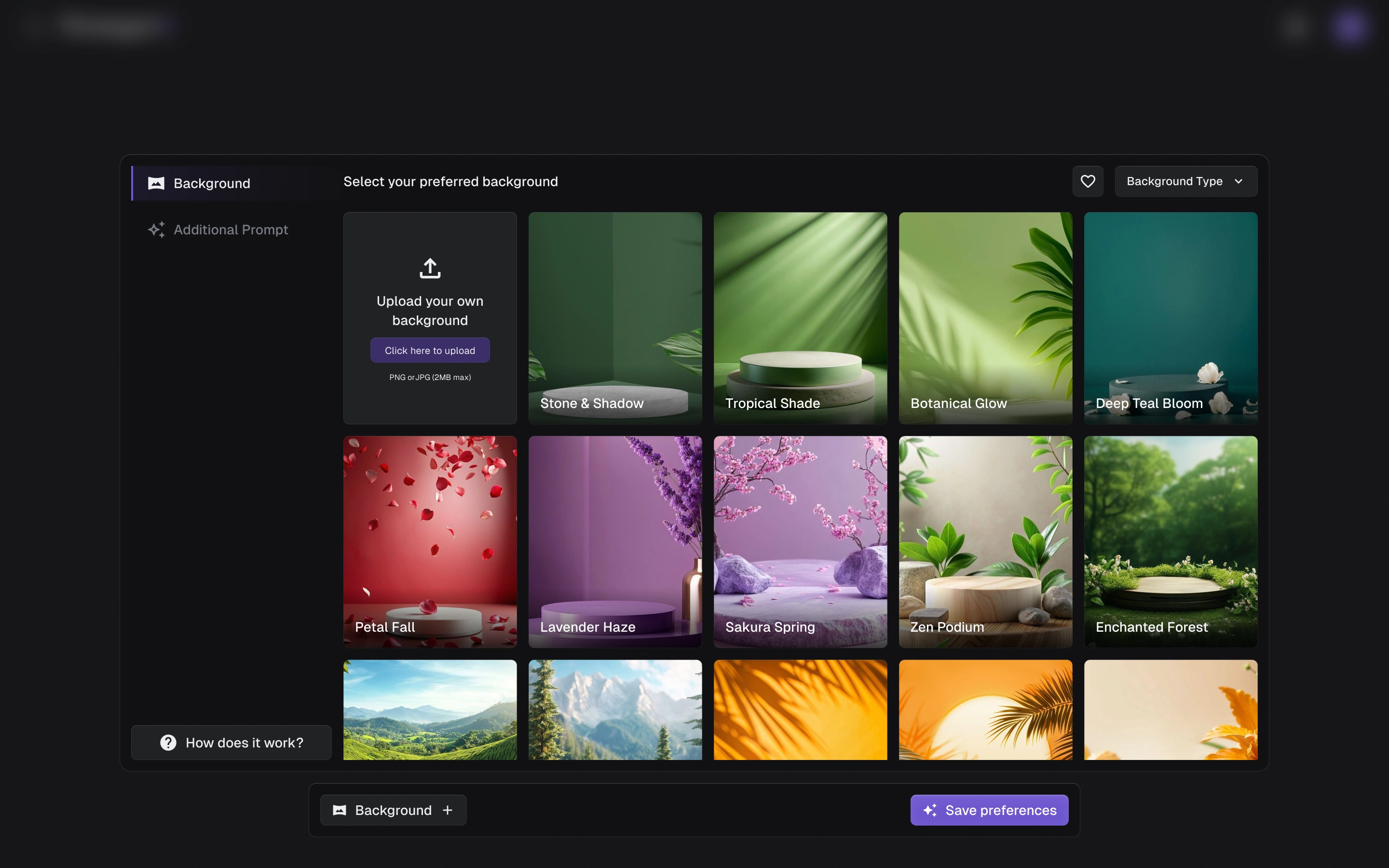

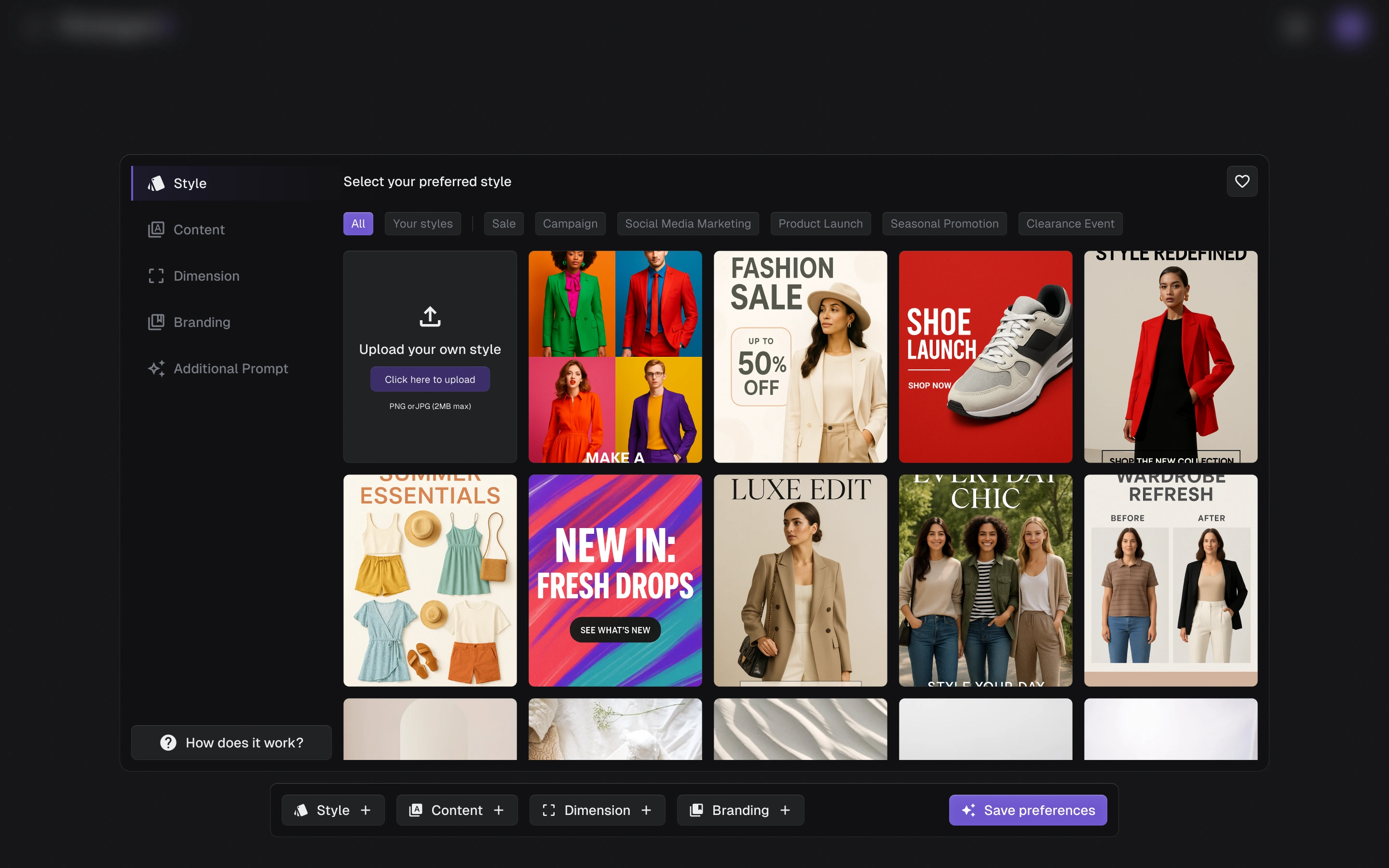

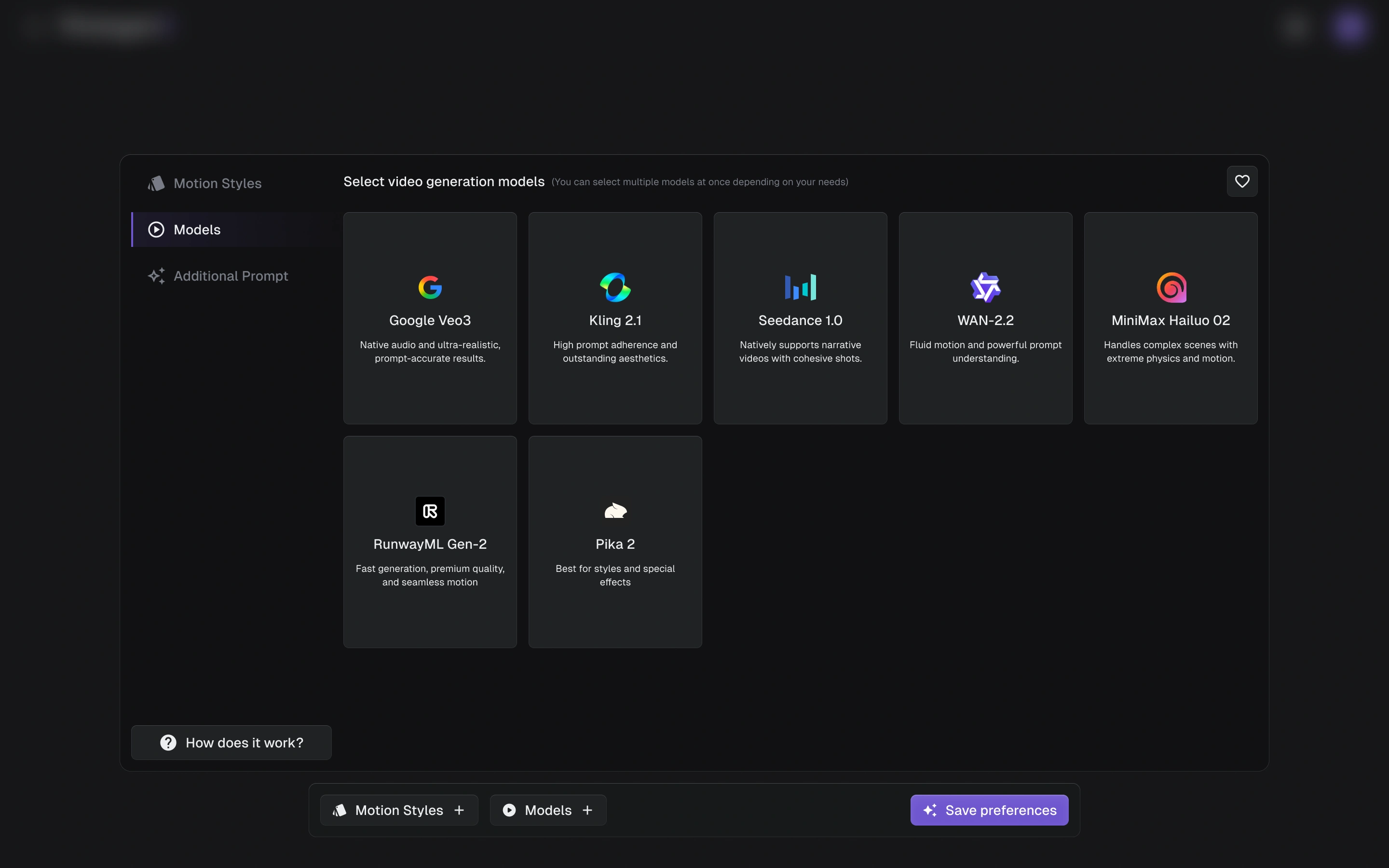

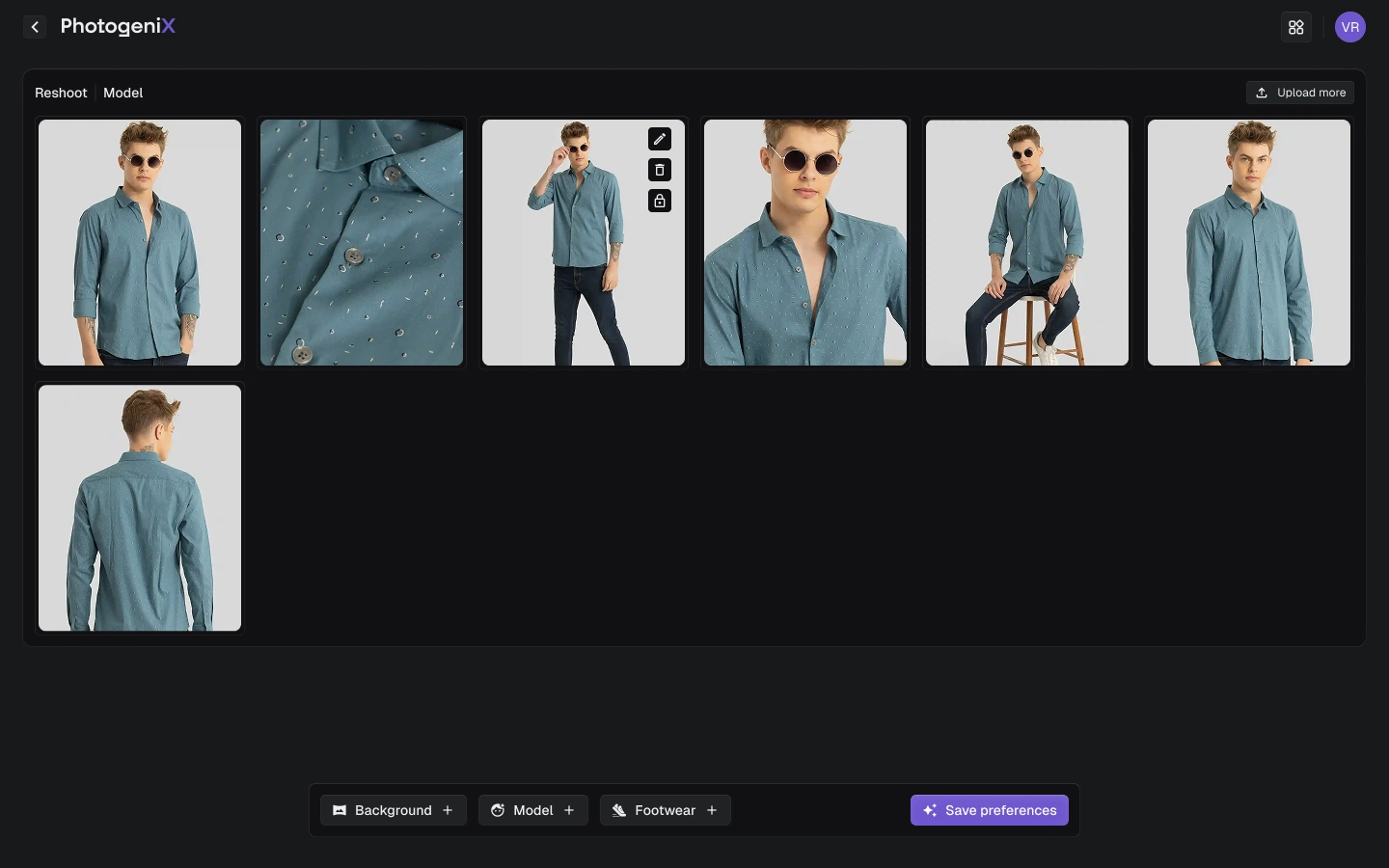

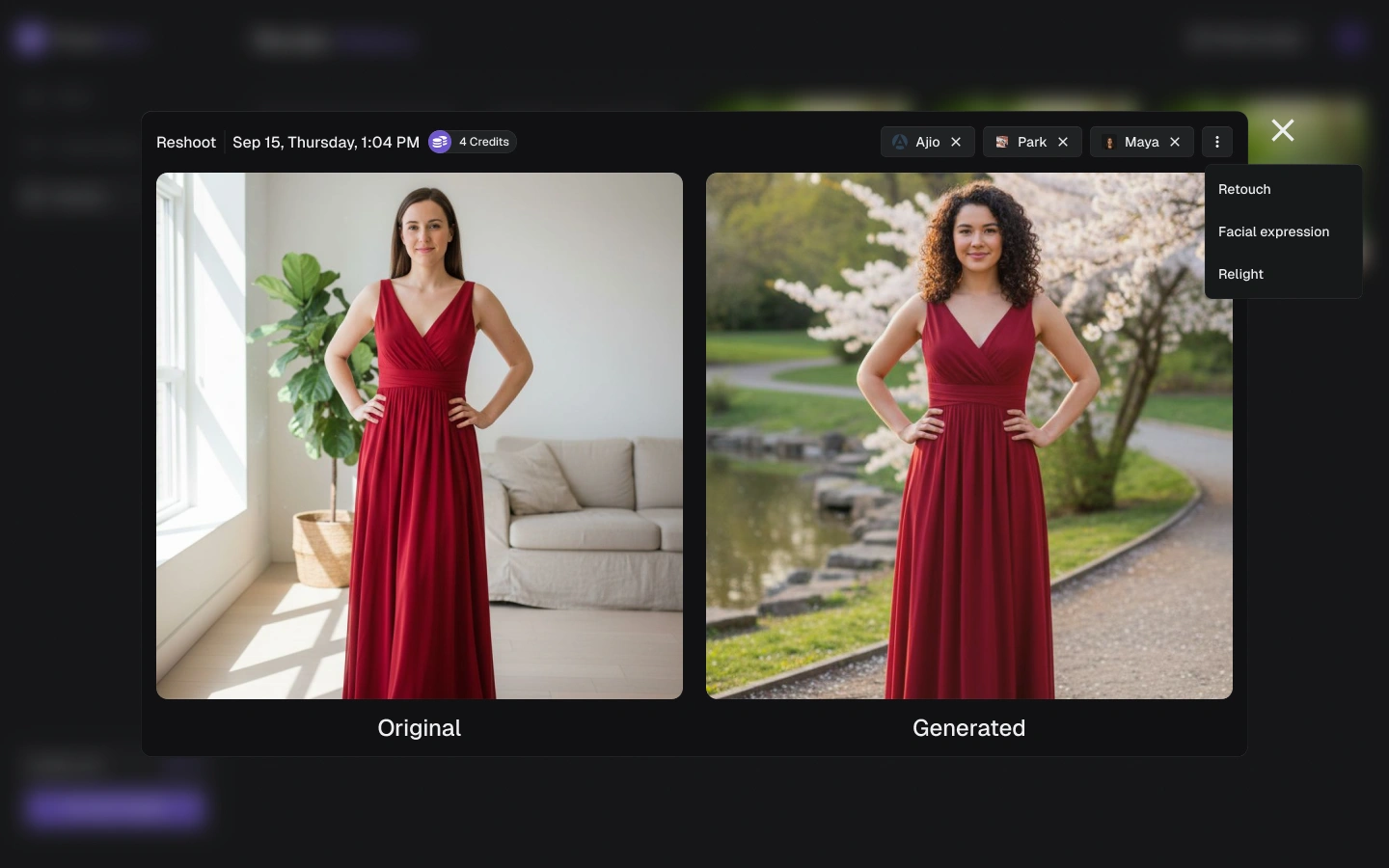

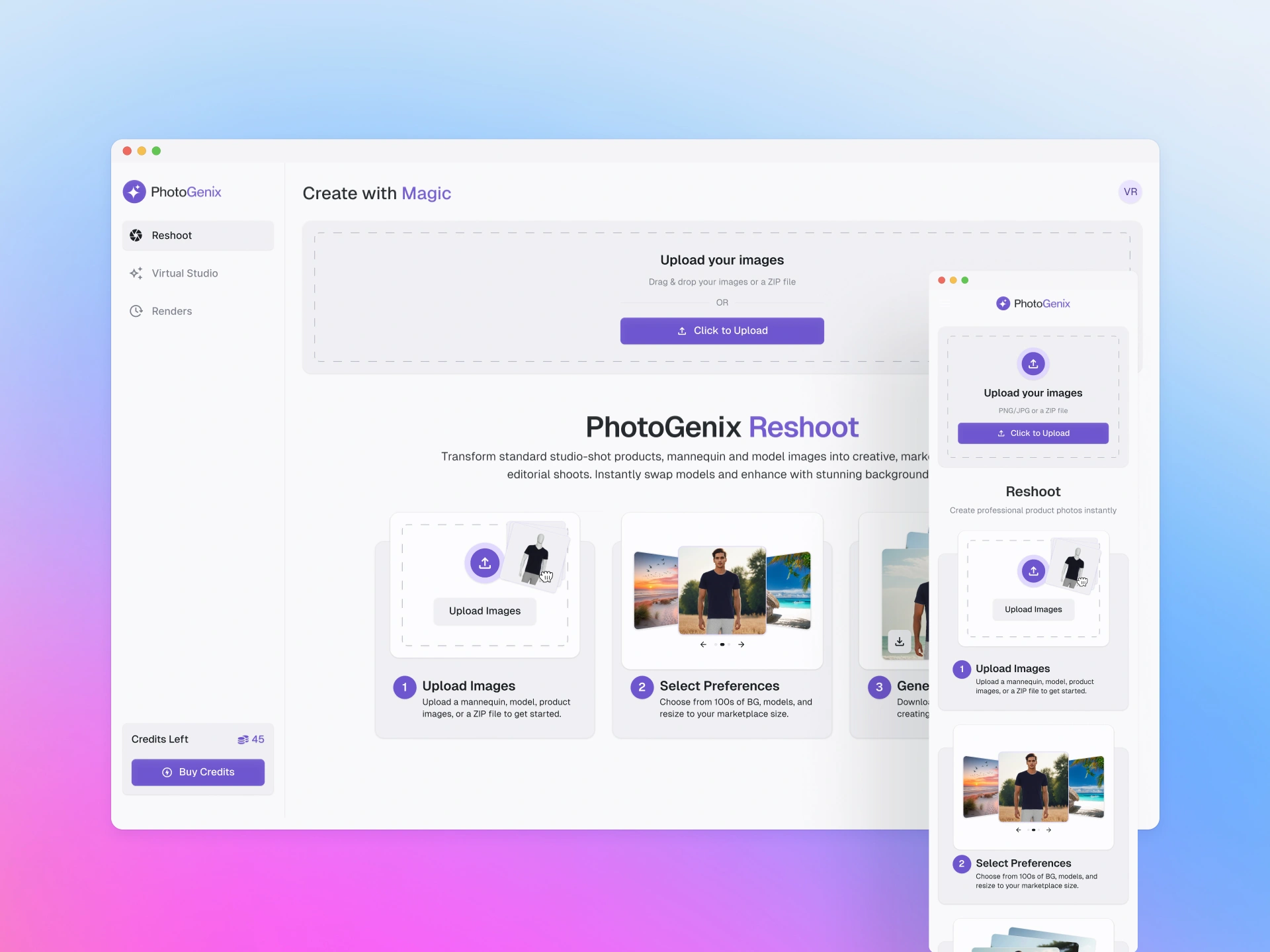

To address this, I helped design Photogenix, an AI-powered product imaging platform that helps fashion and retail brands generate production-ready visuals at scale. It began as a micro-feature in Catalogix (limited to background changes and marketplace resizing) and quickly evolved into a standalone platform with a broader set of capabilities.

I was involved from 0→1, identifying pain points, defining requirements, and shaping the solution from the ground up.

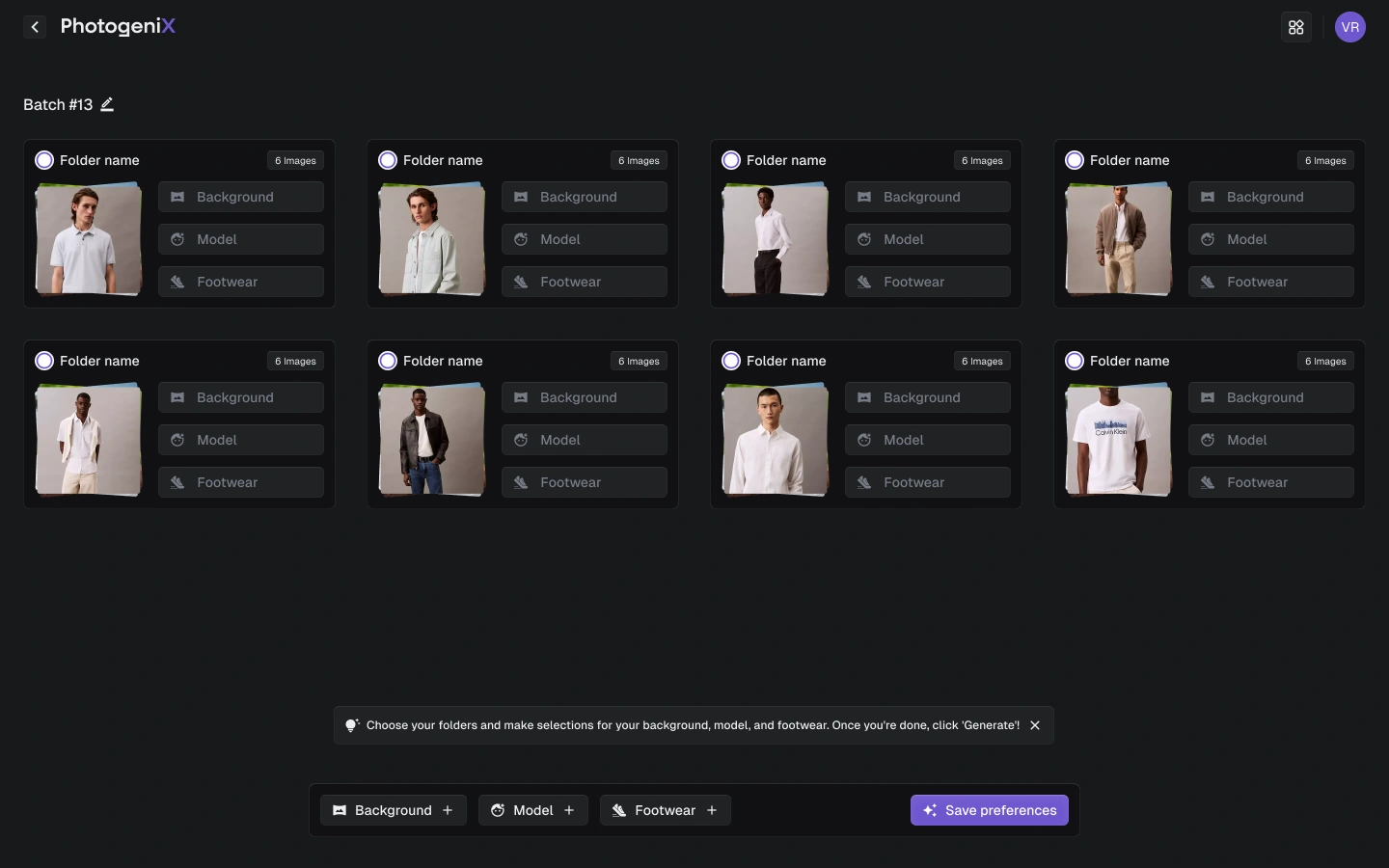

- Users upload a zip file with multiple folders, each representing a product or category.

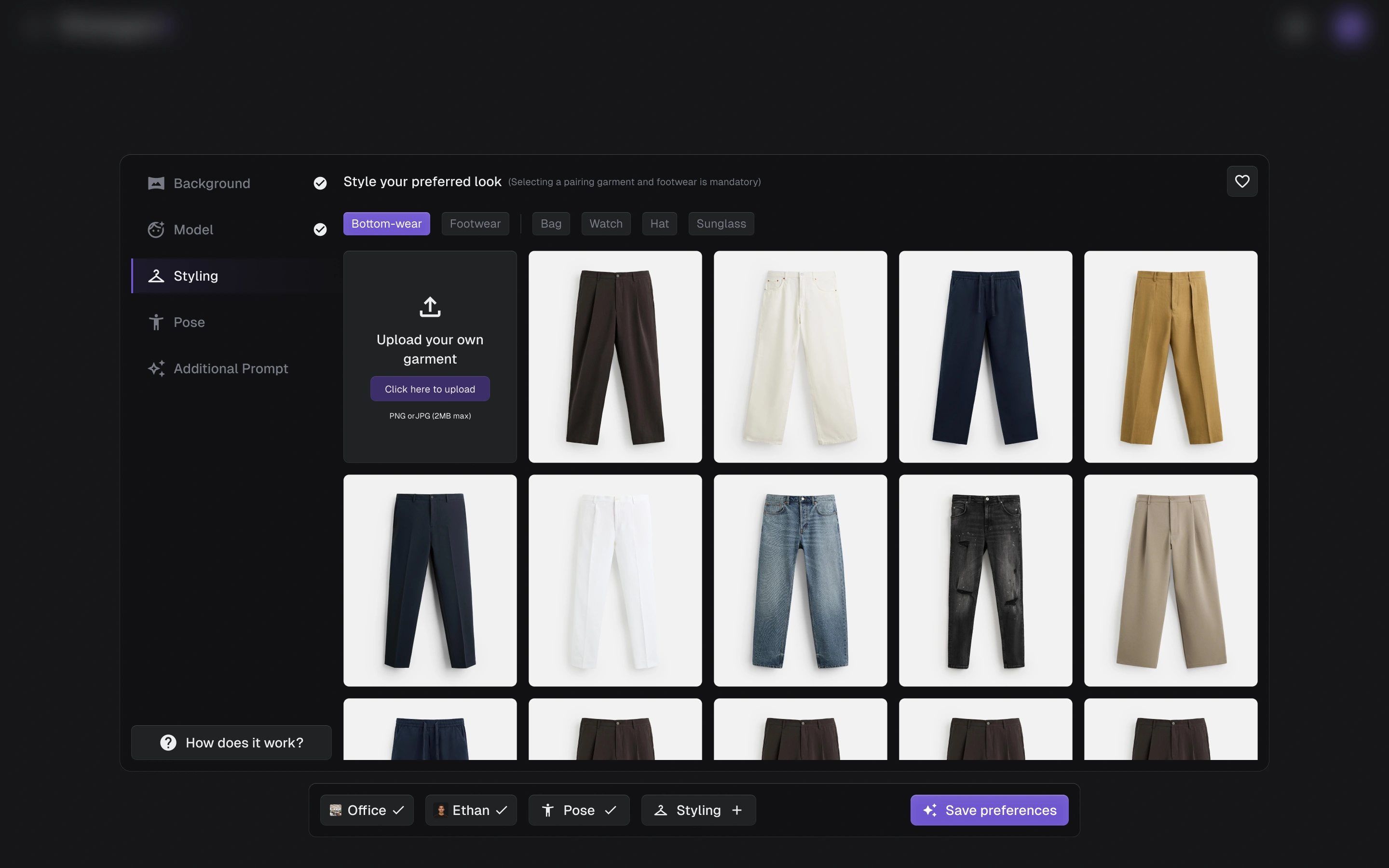

- Each folder can have custom settings (e.g., office look for shirts, street look for sneakers, minimal studio look for accessories).

- The system processes everything asynchronously in the background. Users are free to continue exploring the platform, and they get notified once assets are ready.
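The upload flow above (zip of folders, per-folder settings, background processing, a single notification at the end) can be sketched in Python. This is a minimal illustration under stated assumptions, not Photogenix's implementation: the `settings` mapping, the `DEFAULT_STYLE` fallback, the `generate` placeholder, and the `notify` callback are all hypothetical, and a production system would more likely hand each batch to a job queue than run it inline with `asyncio`.

```python
import asyncio
import io
import zipfile
from collections import defaultdict
from pathlib import PurePosixPath

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}
DEFAULT_STYLE = "minimal studio"  # hypothetical fallback when a folder has no setting


def group_zip_by_folder(data: bytes) -> dict[str, list[str]]:
    """Group image entries in a zip archive by their top-level folder."""
    groups: dict[str, list[str]] = defaultdict(list)
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        for name in zf.namelist():
            path = PurePosixPath(name)
            # Skip directory entries, files at the archive root, and non-images.
            if name.endswith("/") or len(path.parts) < 2:
                continue
            if path.suffix.lower() not in IMAGE_EXTS:
                continue
            groups[path.parts[0]].append(name)
    return dict(groups)


async def generate(image: str, style: str) -> str:
    """Hypothetical stand-in for the model call; returns a fake asset name."""
    await asyncio.sleep(0)
    return f"{image}@{style}"


async def process_batch(data: bytes, settings: dict[str, str], notify) -> dict[str, list[str]]:
    """Generate every image with its folder's style, then notify the user once."""
    results: dict[str, list[str]] = {}
    for folder, images in group_zip_by_folder(data).items():
        style = settings.get(folder, DEFAULT_STYLE)
        # Images within one folder are generated concurrently.
        results[folder] = await asyncio.gather(*(generate(img, style) for img in images))
    notify(f"{sum(len(v) for v in results.values())} assets ready")
    return results
```

In a web app, `process_batch` would typically be scheduled (for example with `asyncio.create_task` or a worker queue) so the upload request returns immediately and the user can keep browsing until `notify` fires.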

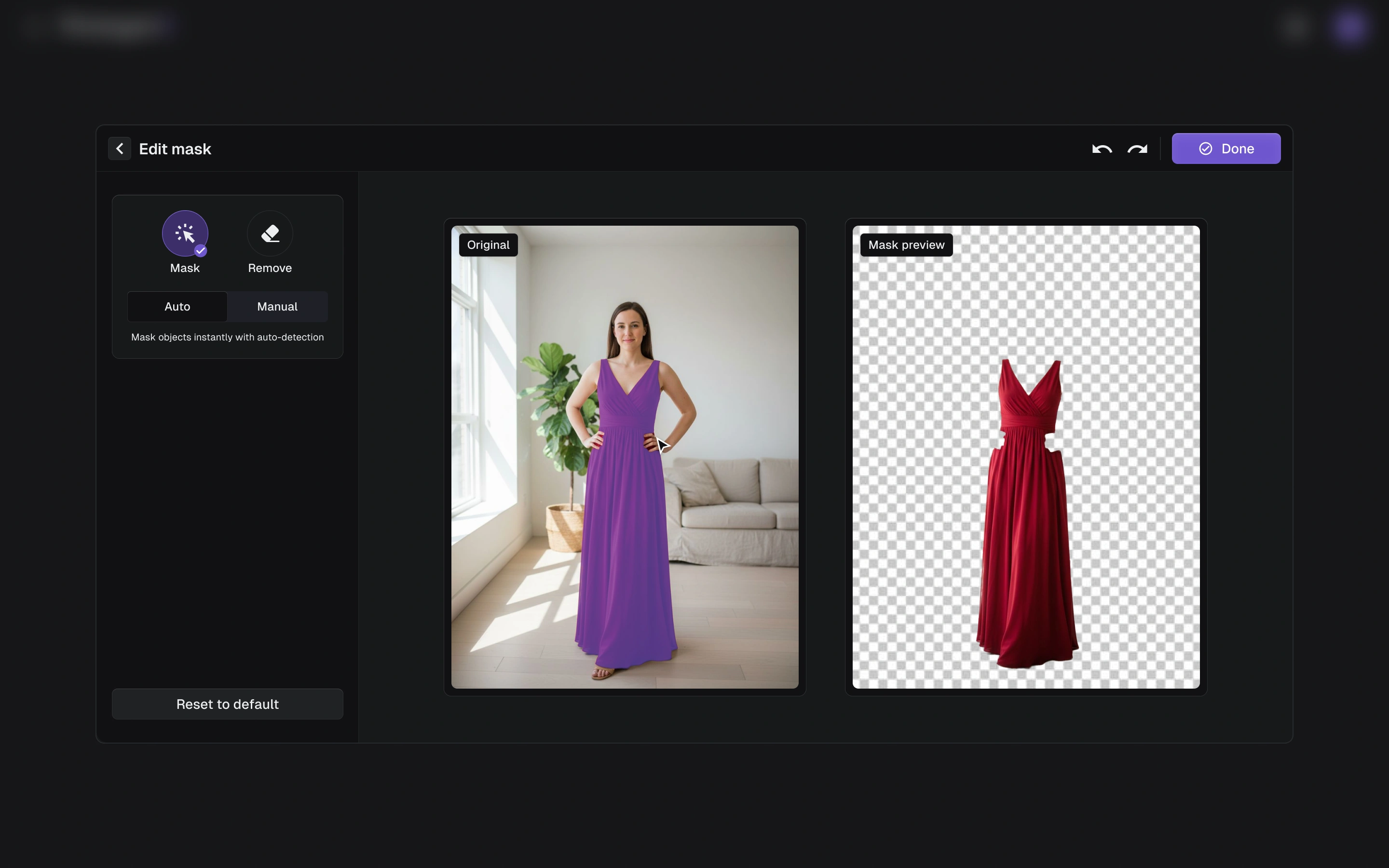

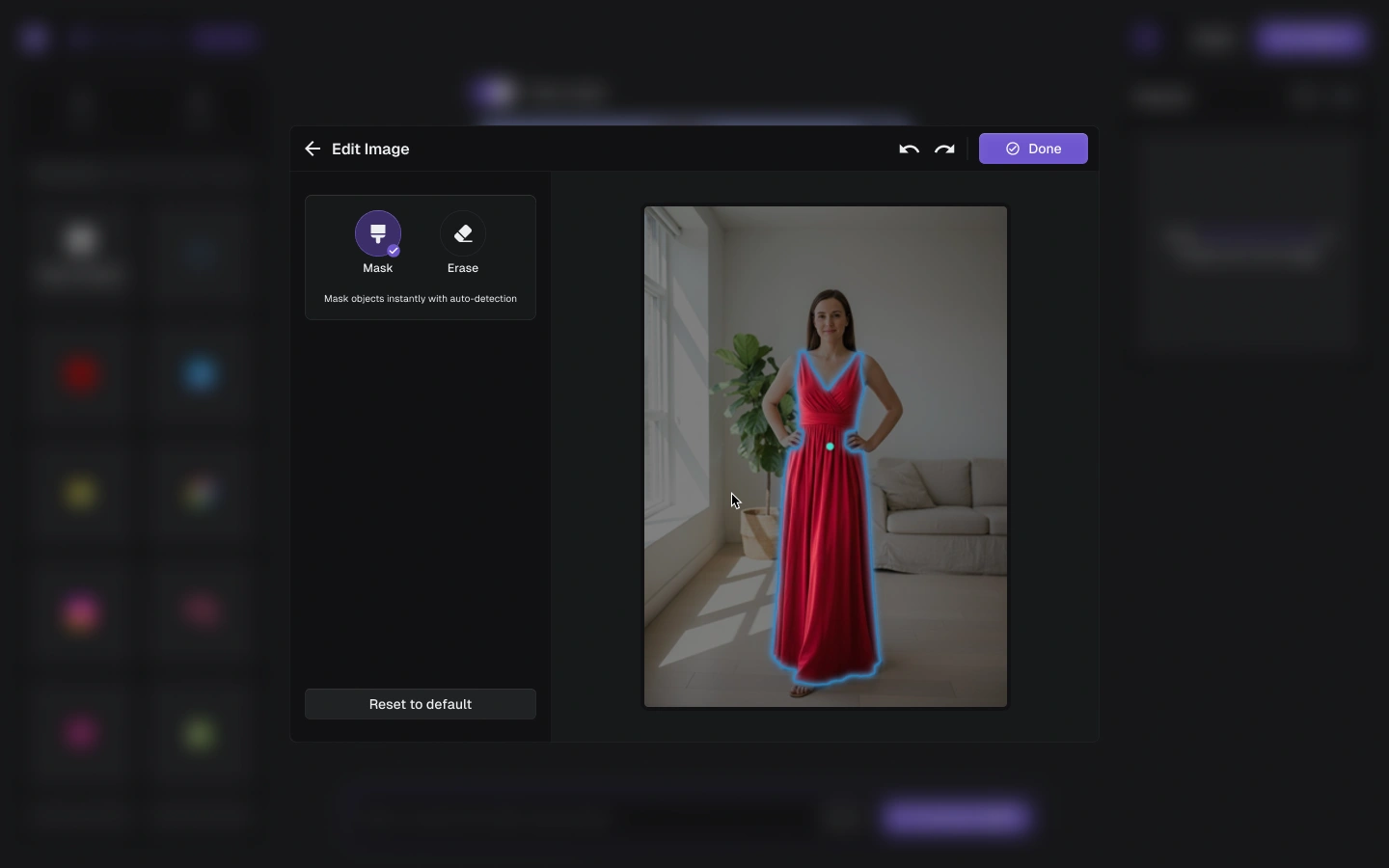

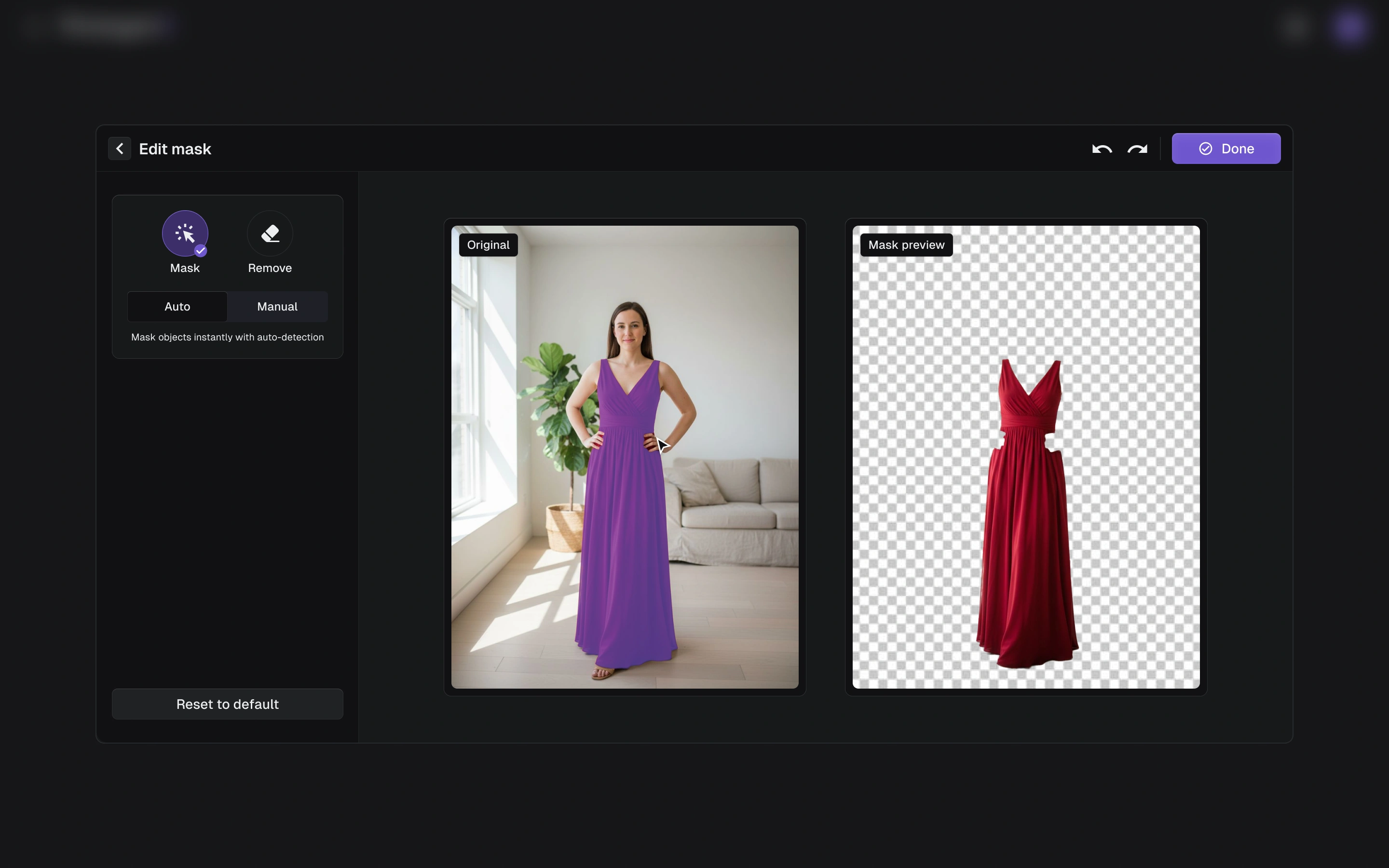

- Artifacts in complex areas (garments with fine textures, accessories not rendering correctly)

- Anatomical distortions (hands/fingers, a common SDXL limitation)

- Background blending issues (shadows and edges not harmonized with the scene)

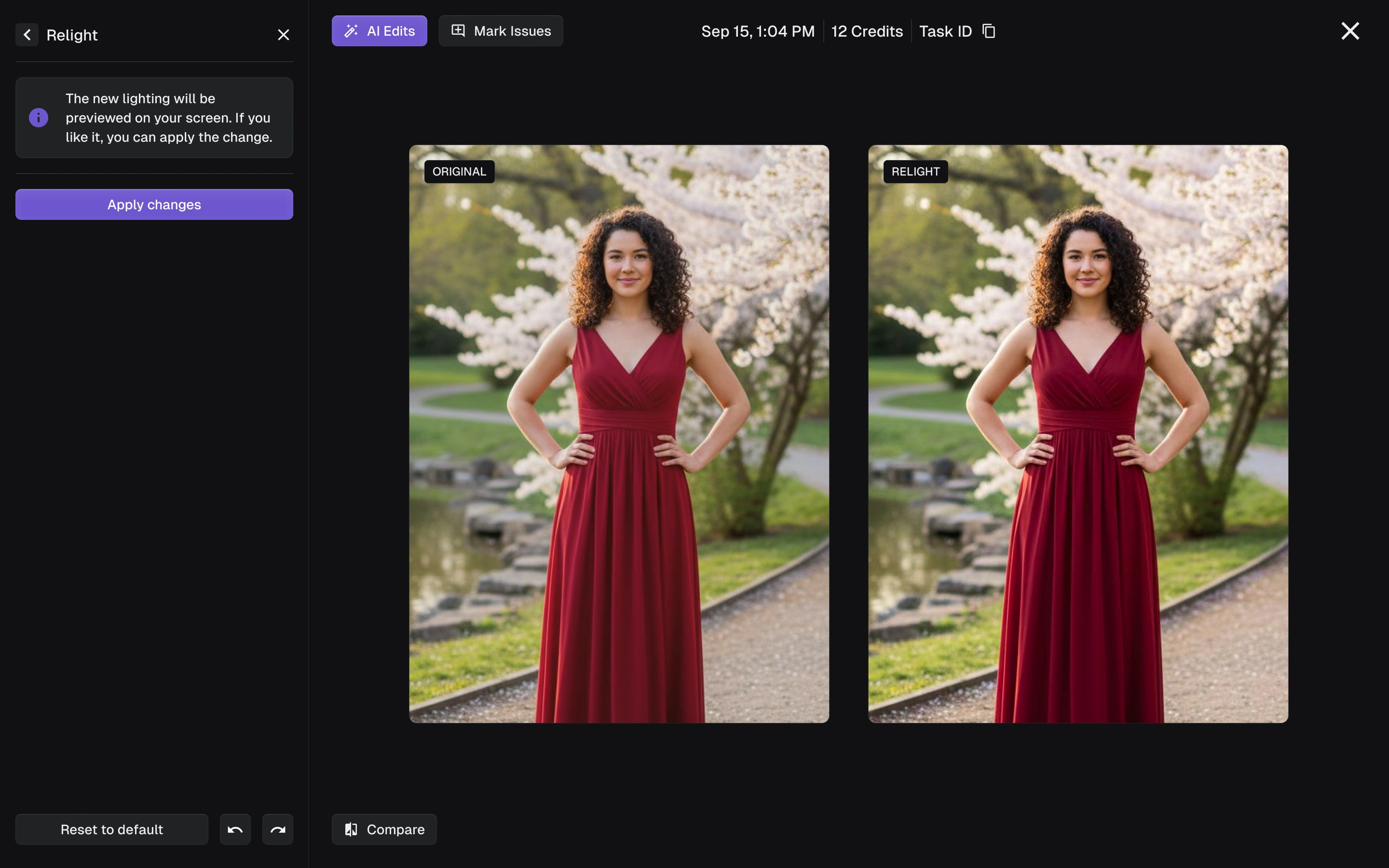

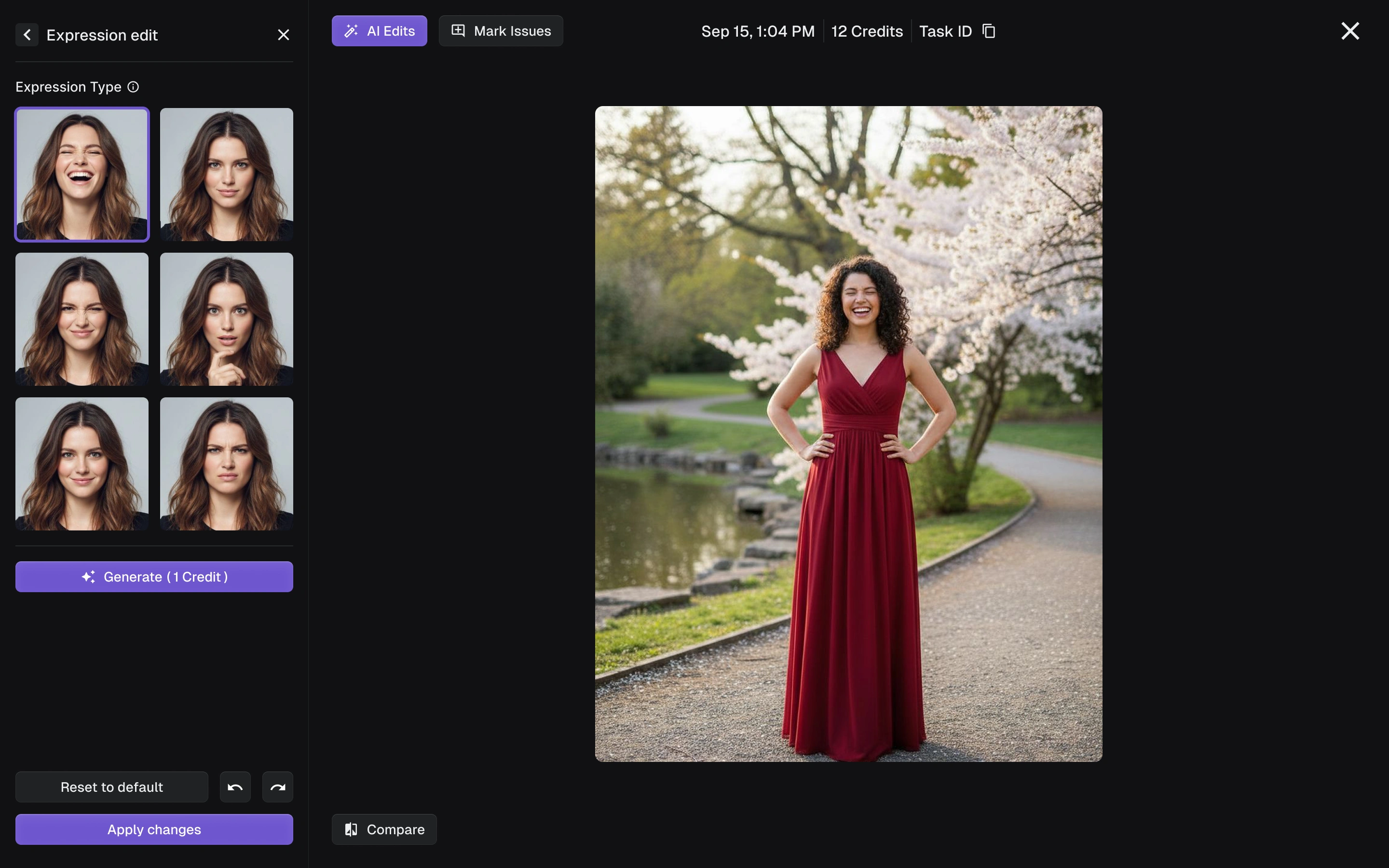

- Inconsistent lighting or mismatched expressions

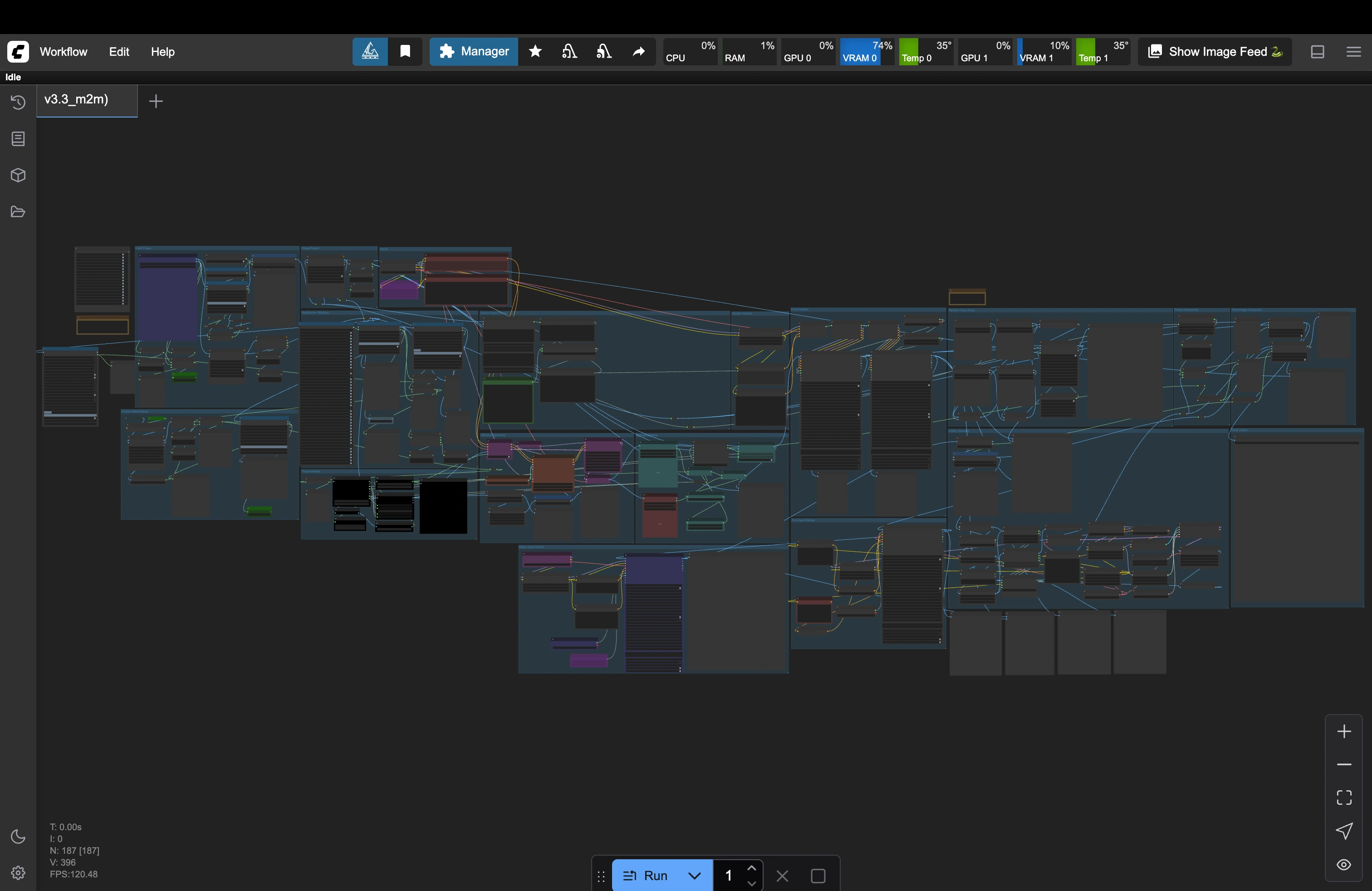

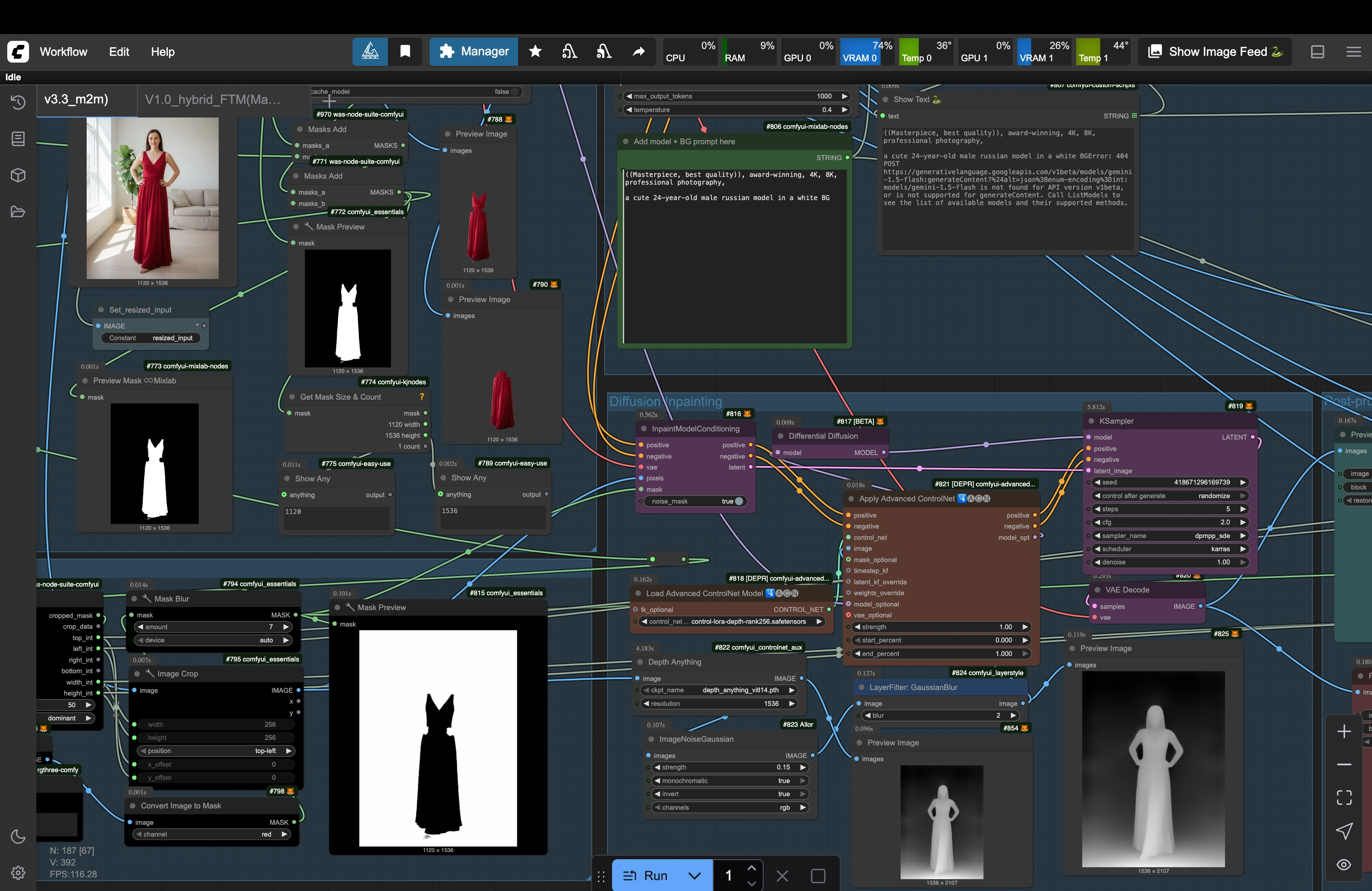

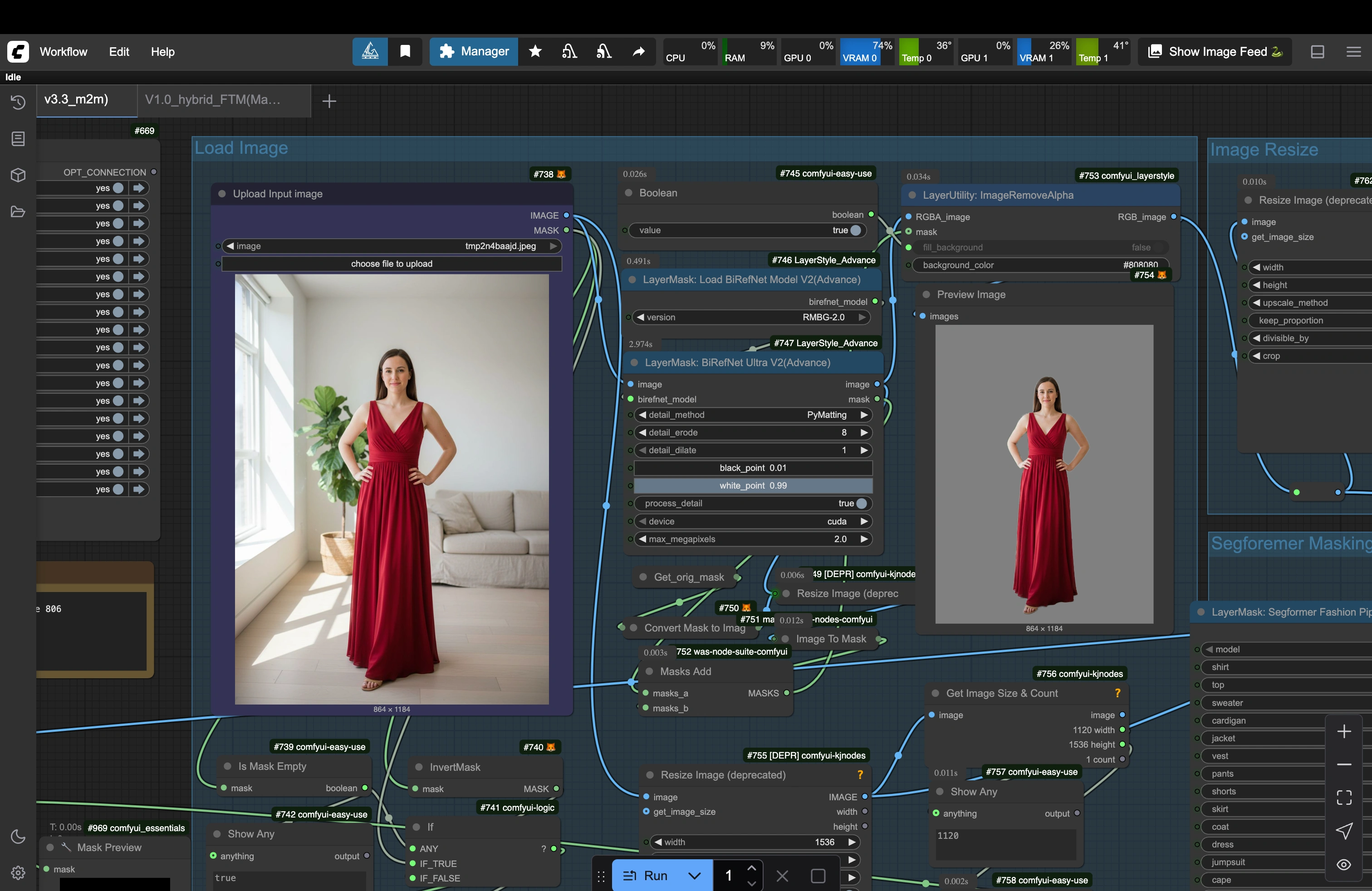

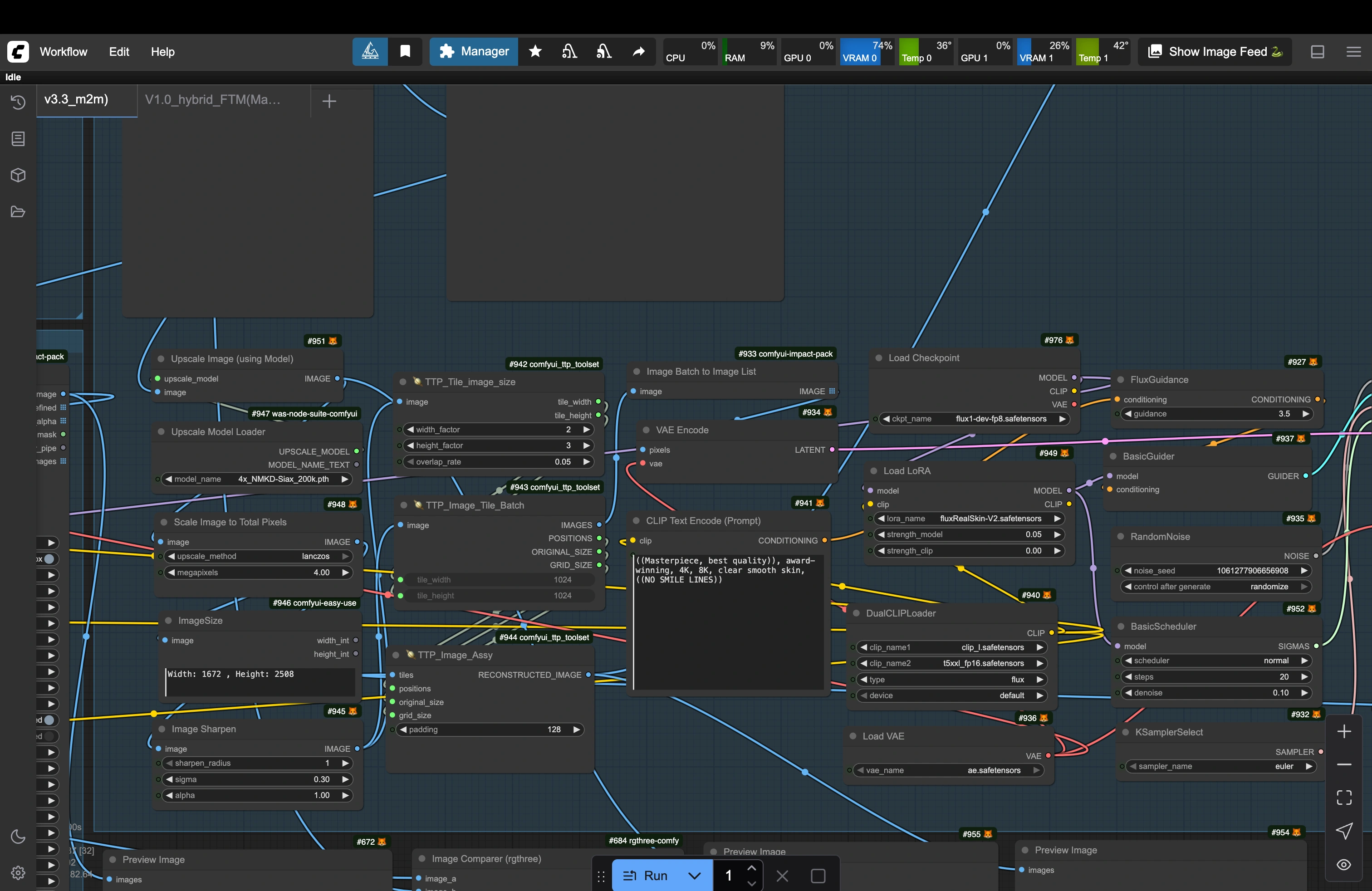

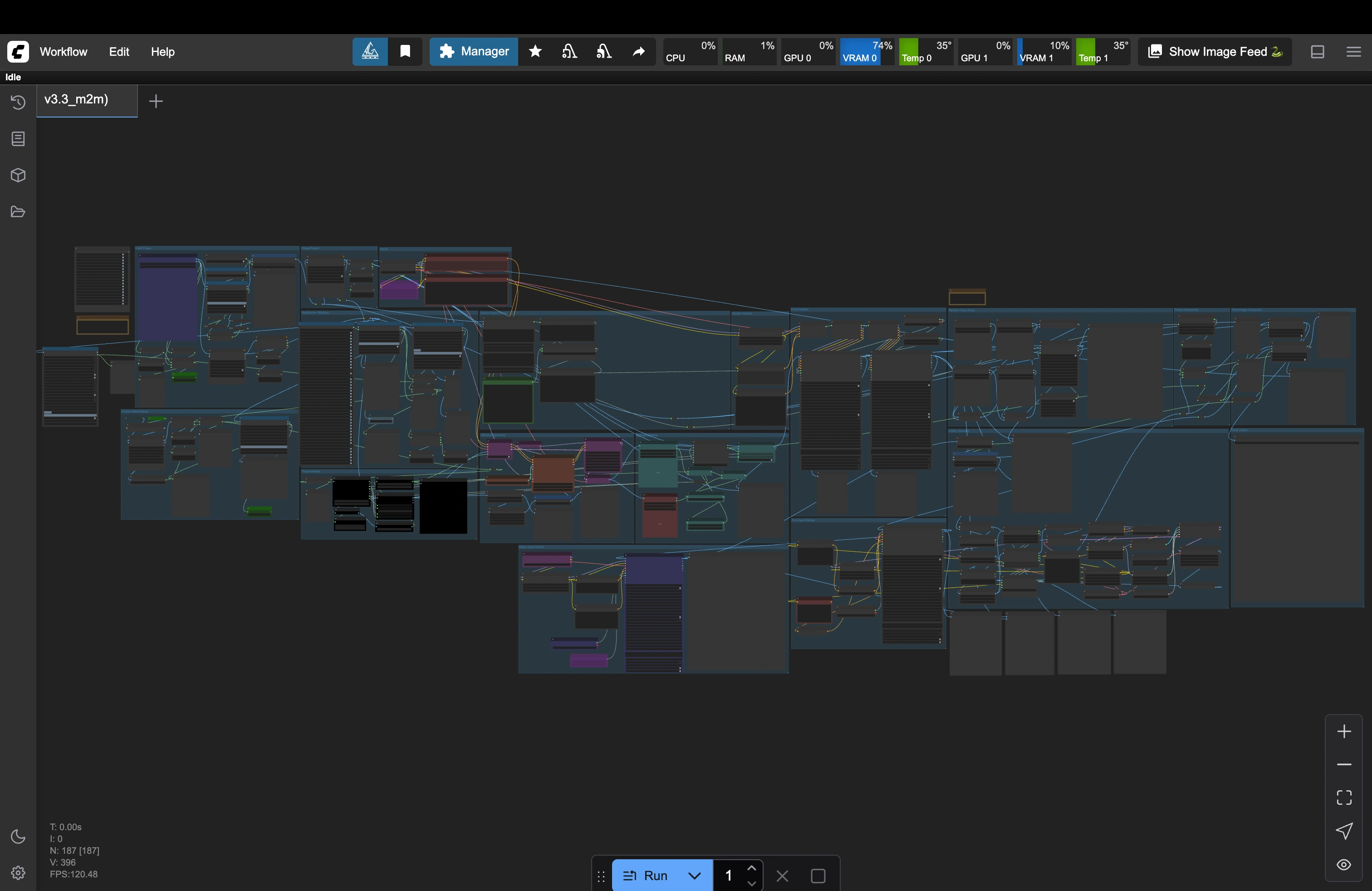

- Model-to-Model & Mannequin-to-Model workflows: Built early prototypes integrating Depth and Canny ControlNets to preserve pose and structure while maintaining stylistic flexibility. These prototypes provided a working foundation for our generative fashion pipeline.

- Continuous improvements: Iterated across multiple model updates, tuning prompt conditioning, sampler settings, and ControlNet weights to improve realism, lighting consistency, and fabric detail.

- Bridging design & AI: This effort helped me translate design intent into technical workflows, ensuring that user-facing features (like mannequin-to-model conversion) were feasible and aligned with real model capabilities.
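The Depth + Canny ControlNet setup and the settings sweep described above can be sketched with Hugging Face `diffusers`. This is an illustration under assumptions, not the pipeline the team shipped: the public SDXL base and Depth/Canny ControlNet checkpoints, the DPM-Solver scheduler, and every sweep value are stand-ins, and the depth and Canny control images are assumed to be precomputed from the source model or mannequin photo. The heavy imports live inside `load_pipeline` so the sweep logic runs without a GPU.

```python
from itertools import product

# Hypothetical sweep values; the project's actual weights and settings are not stated.
DEPTH_WEIGHTS = [0.4, 0.6, 0.8]
CANNY_WEIGHTS = [0.3, 0.5]
GUIDANCE_SCALES = [5.0, 7.0]
STEPS = [30]


def sweep_configs():
    """Yield pipeline keyword arguments for every combination of settings."""
    for d, c, g, s in product(DEPTH_WEIGHTS, CANNY_WEIGHTS, GUIDANCE_SCALES, STEPS):
        yield {
            # One scale per ControlNet, in the same order as the control images.
            "controlnet_conditioning_scale": [d, c],
            "guidance_scale": g,
            "num_inference_steps": s,
        }


def load_pipeline():
    """Build an SDXL pipeline with Depth and Canny ControlNets (needs diffusers, torch, a GPU)."""
    import torch
    from diffusers import (
        ControlNetModel,
        DPMSolverMultistepScheduler,
        StableDiffusionXLControlNetPipeline,
    )

    controlnets = [
        ControlNetModel.from_pretrained("diffusers/controlnet-depth-sdxl-1.0", torch_dtype=torch.float16),
        ControlNetModel.from_pretrained("diffusers/controlnet-canny-sdxl-1.0", torch_dtype=torch.float16),
    ]
    pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        controlnet=controlnets,  # a list is wrapped into a multi-ControlNet
        torch_dtype=torch.float16,
    )
    # Sampler choice is one of the tuned knobs; DPM-Solver is only an example.
    pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)
    return pipe.to("cuda")


def generate(pipe, prompt, depth_map, canny_map, config):
    """Render one image; depth_map and canny_map are precomputed PIL control images."""
    return pipe(prompt=prompt, image=[depth_map, canny_map], **config).images[0]
```

Keeping the prompt and control images fixed while iterating over `sweep_configs()` means differences between outputs come from the weights and sampler settings; passing a seeded `torch.Generator` via the pipeline's `generator=` argument would isolate them further.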

Photogenix V1 Case Study

Explore the first version of Photogenix and see how our design and functionality have grown.
